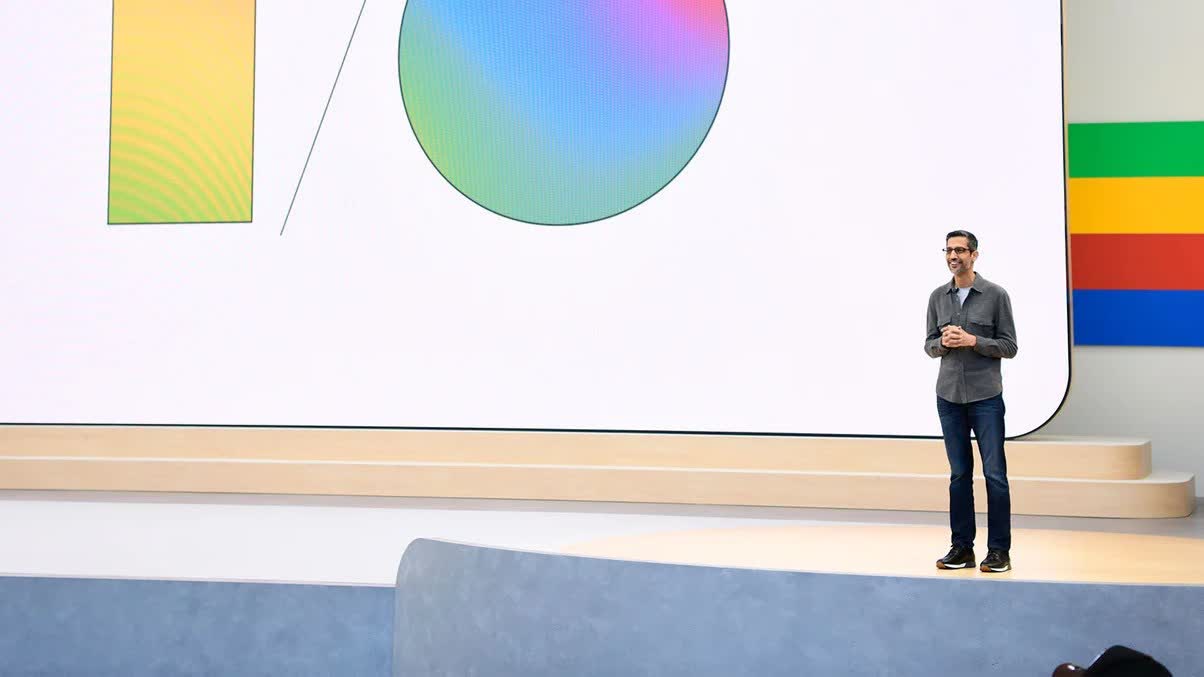

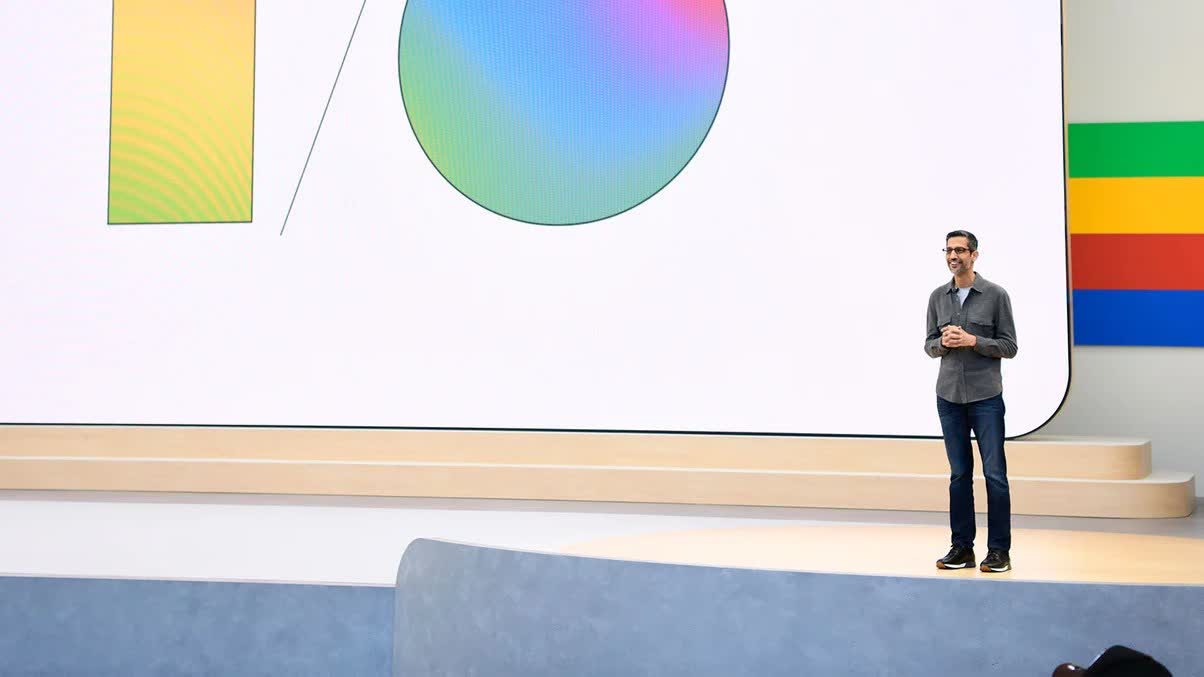

What just happened? Google's push into generative AI has now reached its search engine. The feature is live, with Google using its Gemini AI to structure and contextualize search results. However, the rollout has not been without problems, and concerns over AI inaccuracies surfaced almost immediately.

Google has introduced AI-generated summaries, called AI Overviews, in search results for users in the US, with the aim of improving how the engine handles complex queries. Google expects to expand the feature globally in the coming months, reaching a billion users by the end of 2024.

Using Google's Gemini AI, previously known as Bard, the search engine can now provide detailed answers to multifaceted questions. Demonstrations show the AI offering multi-step answers to search queries, potentially sparing users from running several separate searches.

After an initial result, users can adjust their query, engaging in a back-and-forth with Gemini to refine the results. An upcoming update will also let users ask for simpler or more detailed answers.

Responding to concerns that AI Overviews might siphon traffic from websites by reproducing their content on the results page, Google insists that the feature actually directs users to a broader range of websites. Google says its testing shows more clicks for sites linked in AI Overviews, but this claim has been met with skepticism, and it does not address the ethical concerns of using websites' content without permission.

Even in one of the examples from Google's own blog post, which was presumably reviewed before publication, AI-generated answers lead to yet more AI-generated responses.

This is indeed an unusual development. pic.twitter.com/CKG3kX3PDD

– Glen Allsopp (@ViperChill) May 14, 2024

Shortly after the announcement, observers also noted that the AI frequently pulls information from Quora, which itself relies on AI, so AI Overviews may end up sourcing their answers from another AI. This feedback loop raises doubts about the credibility of the information provided.

Moreover, The Verge spotted a significant error in one of Google's own Gemini demos: the AI advised opening a film camera without mentioning that this must be done in a darkroom to avoid ruining the film. The mistake highlights the ongoing problem of accuracy and reliability in generative AI output.